Ever wonder how search engines like Google can answer your queries by serving you relevant content and pages? Search engines get help from search crawlers, which are also called search bots, spiders, or web crawlers.

Learn how search bots work so you can improve your search engine optimization (SEO) efforts!

What is a search crawler?

A search crawler is a program that browses pages across the Internet and collects information about them. Its mission is to help search engines index those pages so they can retrieve relevant information when a user searches for it. A search crawler is also called a search bot, spider, or web crawler.

Why search bots are important for SEO

Search bots are critical for your SEO efforts. These spiders must be able to discover and crawl your site before your pages can appear in search engine results pages (SERPs).

That means you should make sure you’re not blocking web crawlers from the pages you want to rank.

How does a web crawler work?

Think of search crawlers as voyagers of the World Wide Web.

They start with a list of known URLs and crawl those pages first. Next, they follow the links on those pages to discover and crawl other pages.
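The link-following process above can be sketched as a breadth-first crawl. This is a minimal Python sketch, assuming a small hypothetical in-memory link graph (`LINKS`) in place of real HTTP fetching and HTML parsing; `crawl` and `max_pages` are illustrative names, not any search engine’s actual code.

```python
from collections import deque

# Hypothetical link graph standing in for the web: each URL maps to the URLs
# its page links to. A real crawler would fetch each page and parse its links.
LINKS = {
    "https://example.com/": ["https://example.com/about", "https://example.com/blog"],
    "https://example.com/about": ["https://example.com/"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.org/"],
    "https://example.com/blog/post-1": [],
    "https://example.org/": ["https://example.org/contact"],
    "https://example.org/contact": [],
}

def crawl(seed_urls, max_pages=100):
    """Breadth-first crawl: visit the seed URLs first, then the pages they link to."""
    frontier = deque(seed_urls)  # URLs waiting to be crawled
    seen = set(seed_urls)        # URLs already queued, so each is crawled only once
    visited = []                 # pages in the order they were crawled
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited

print(crawl(["https://example.com/"]))
```

The seed page is crawled first, then the pages it links to, then the pages those link to. The `seen` set keeps the crawler from looping back to pages it has already queued, such as the link from /about back to the homepage.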

Google describes the process this way: “We use a huge set of computers to crawl billions of pages on the web. The program that does the fetching is called Googlebot (also known as a crawler, robot, bot, or spider). Googlebot uses an algorithmic process to determine which sites to crawl, how often, and how many pages to fetch from each site.”

Because the web contains a vast number of pages, search crawlers are selective about which content they prioritize. They follow policies that determine which pages to crawl and how often to revisit them for updates.
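A recrawl policy can be as simple as giving each page its own revisit interval and always crawling whichever page is due next. The sketch below assumes hypothetical per-page intervals in hours; `recrawl_schedule` is an illustrative name, and real crawlers weigh many more signals than a fixed interval.

```python
import heapq

def recrawl_schedule(pages, horizon_hours):
    """Return the (hour, url) crawl events that fall within horizon_hours.

    pages maps each URL to a hypothetical recrawl interval in hours
    (must be > 0). Pages that change often get shorter intervals.
    """
    # Min-heap of (next_due_hour, url); every page starts out due at hour 0.
    heap = [(0, url) for url in pages]
    heapq.heapify(heap)
    events = []
    while heap and heap[0][0] <= horizon_hours:
        due, url = heapq.heappop(heap)
        events.append((due, url))
        # Schedule this page's next visit one interval later.
        heapq.heappush(heap, (due + pages[url], url))
    return events

schedule = recrawl_schedule({"/news": 6, "/about": 24}, horizon_hours=24)
print(schedule)
```

With these intervals, a frequently updated /news page is crawled five times in the first 24 hours, while a rarely changing /about page is crawled only twice.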

Search bots gather information about the pages they crawl, like voyagers taking notes about new places they’ve visited. They collect details such as on-page copy, images and their alt text, and meta tags. Search engines later process and store this information in their index so they can retrieve the right pages when a user searches!
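To make those “notes” concrete, here is a minimal sketch using Python’s standard-library `html.parser` that pulls visible text, image alt text, and named meta tags out of a page. The `PageNotes` class and the sample HTML are hypothetical; production crawlers use far more robust parsing and rendering.

```python
from html.parser import HTMLParser

class PageNotes(HTMLParser):
    """Collects visible text, image alt text, and <meta name=... content=...> tags."""

    def __init__(self):
        super().__init__()
        self.text = []
        self.alt_texts = []
        self.meta = {}
        self._skip = 0  # nesting depth inside <script>/<style>, which isn't visible copy

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "img" and attrs.get("alt"):
            self.alt_texts.append(attrs["alt"])
        elif tag == "meta" and attrs.get("name") and attrs.get("content") is not None:
            self.meta[attrs["name"].lower()] = attrs["content"]

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        # Keep non-empty text that isn't inside a script or style block.
        if not self._skip and data.strip():
            self.text.append(data.strip())

html = """<html><head><title>Blue Widgets</title>
<meta name="description" content="Hand-made blue widgets.">
<meta name="robots" content="noindex">
</head><body><h1>Shop our widgets</h1>
<img src="w.png" alt="A blue widget">
<script>var x = 1;</script></body></html>"""

notes = PageNotes()
notes.feed(html)
print(notes.text)       # ['Blue Widgets', 'Shop our widgets']
print(notes.alt_texts)  # ['A blue widget']
print(notes.meta)       # {'description': 'Hand-made blue widgets.', 'robots': 'noindex'}
```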

If you have a new website and no other page links to it yet, you can submit your URL through Google Search Console.

FAQs on search crawlers

Now that you know why search bots are important for SEO and how web crawlers work, let’s dive into a few FAQs about search crawlers:

What are examples of web crawlers?

Most search engines have their own search crawlers. Search engine giants like Google even run several crawlers, each with a particular area of focus. Here are examples of web crawlers:

- Googlebot Desktop: Google’s crawler that simulates a desktop user

- Googlebot Smartphone: Google’s crawler that simulates a mobile phone user

- Bingbot: Bing’s web crawler that was launched in 2010

- Baiduspider: The web crawler of the search engine Baidu

- DuckDuckBot: The search bot of DuckDuckGo

- YandexBot: The search crawler of the search engine Yandex
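Server logs identify these crawlers by the user-agent string each request carries. The sketch below matches user-agent tokens these crawlers use (Googlebot, bingbot, Baiduspider, DuckDuckBot, YandexBot); the token table and `identify_crawler` are illustrative and may need updating as crawlers change. User-agent strings can be faked, so a match only tells you what a request claims to be; Google, for example, documents a reverse DNS check for verifying real Googlebot traffic.

```python
# User-agent tokens mapped to the search engine that uses them.
CRAWLER_TOKENS = {
    "Googlebot": "Google",   # covers both Googlebot Desktop and Googlebot Smartphone
    "bingbot": "Bing",
    "Baiduspider": "Baidu",
    "DuckDuckBot": "DuckDuckGo",
    "YandexBot": "Yandex",
}

def identify_crawler(user_agent):
    """Return the engine whose crawler token appears in user_agent, or None."""
    ua = user_agent.lower()
    for token, engine in CRAWLER_TOKENS.items():
        if token.lower() in ua:
            return engine
    return None

print(identify_crawler("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
print(identify_crawler("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:120.0) Gecko/20100101 Firefox/120.0"))
```

The first sample user agent is identified as Google; the second is an ordinary browser, so the function returns `None`.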

Should you always allow search crawlers access to your website?

As a website owner, you want your pages to get indexed and appear in search results, so having search bots crawl your site is a good thing. However, frequent crawling can take up server resources and drive up your bandwidth costs.

In addition, you may have pages that you don’t want search engines to discover and serve to users, such as:

- Landing page for a campaign: You may have pay-per-click (PPC) landing pages that you want only targeted users to access.

- Internal search results page: Does your website have a search function? If so, its search results pages may not contain useful content for Google’s searchers, so you likely don’t want them to appear in Google’s SERPs. You’d rather have searchers land on your other helpful pages.

- Thank-you or Welcome page

- Admin log-in page

Pro tip

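You can add a “noindex” meta tag to pages that you don’t want to show up in SERPs. To keep spiders from crawling a page in the first place, add a “Disallow” rule for it in your robots.txt file. Don’t use both on the same page: if robots.txt blocks a page, crawlers can’t see its noindex tag.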
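You can check how robots.txt Disallow rules apply to specific URLs with Python’s standard-library `urllib.robotparser`. The robots.txt below is a hypothetical example covering the kinds of pages listed above (internal search results, admin log-in, thank-you page).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /admin/
Disallow: /thank-you
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ["https://example.com/blog/crawlers",
            "https://example.com/search?q=widgets",
            "https://example.com/admin/login"]:
    # can_fetch() reports whether the named user agent may crawl the URL.
    print(url, parser.can_fetch("Googlebot", url))
```

The blog post is allowed, while the search results and admin pages are blocked. One caveat: Python’s parser applies the first matching rule in file order, whereas the Robots Exclusion Protocol standard (RFC 9309) uses the most specific match, so results can differ for files that mix overlapping Allow and Disallow rules.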

What is crawl budget?

Crawl budget is the amount of time and resources that search bots will allocate to crawl a website. It includes:

- The number of pages to crawl

- How often the bots will crawl

- How much server load is acceptable
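As a toy model of those three parts, the sketch below treats a crawl budget as a page limit per visit, a revisit interval, and a minimum delay between requests. `CrawlBudget`, `plan_visit`, and `pages_per_day` are hypothetical names; search engines don’t expose their budgets this way, and the numbers are purely illustrative.

```python
from dataclasses import dataclass

@dataclass
class CrawlBudget:
    max_pages: int        # number of pages to crawl per visit
    revisit_hours: float  # how often the bot comes back
    min_delay_s: float    # pause between requests, to limit server load

def plan_visit(urls, budget):
    """Return (seconds_from_start, url) for the requests one visit would make."""
    return [(i * budget.min_delay_s, url)
            for i, url in enumerate(urls[: budget.max_pages])]

def pages_per_day(budget):
    """Pages crawled per day if every visit uses its full page limit."""
    return budget.max_pages * 24 / budget.revisit_hours

budget = CrawlBudget(max_pages=3, revisit_hours=12, min_delay_s=2.0)
print(plan_visit(["/a", "/b", "/c", "/d", "/e"], budget))
print(pages_per_day(budget))
```

Only the first three URLs fit in this visit, spaced two seconds apart, and two visits a day give six crawled pages per day. Pages beyond the limit wait for a later visit, which is why a tight budget can delay how quickly new pages get discovered.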

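Crawl budget matters because your server has to handle requests from search bots on top of traffic from real visitors, and too much combined load can slow your site down. If Googlebot is slowing down your server, you can temporarily reduce its crawl rate by returning 500, 503, or 429 HTTP status codes for a short time; Google has retired the crawl rate limiter tool that used to be available in Search Console.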

Optimize search crawls to boost your SEO strategy

Search bots crawl your site so that your pages are indexed and discoverable. Understanding what they are and how they work enables you to optimize your site to rank in SERPs and help your prospects find your business!

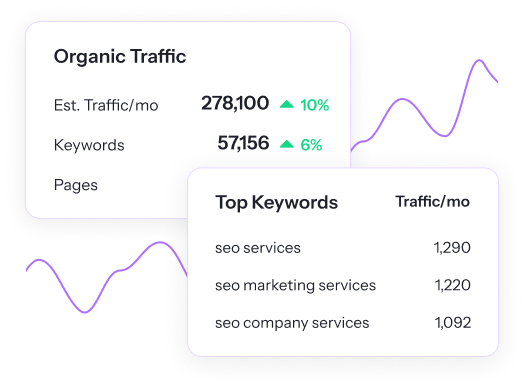

Ready to take your SEO strategy to the next level? Try SEO.com, a free SEO tool for keyword research, rank tracking, and more!
