Google and other search engines use bots — also called “crawlers” or “spiders” — to find pages on the Internet. These bots index content from websites so they appear in search engine results.

Crawlers often index these pages fairly quickly. However, they sometimes take longer to find specific pages due to the colossal volume of content on the web. They may also miss some pages if your website is exceptionally large or complex to navigate.

Thankfully, an Extensible Markup Language (XML) sitemap simplifies this process. A markup language uses tags to describe the structure and meaning of the text in a document. An XML sitemap uses such tags to list a website’s URLs along with details about each one.

What does this sitemap entail? We’ll cover all you need to know about XML sitemaps, including:

- What they are

- What websites should include them

- What they should look like

- How they differ from HTML sitemaps

- Tips for implementing them

What Is an XML Sitemap?

An XML sitemap is a text file listing all pages of your website you want search engines to index. Its primary purpose is to ensure Google can:

- Locate and crawl all of these pages successfully.

- Understand the structure of your website and content.

- Stay up to date with new content.

Businesses looking to drive traffic want Google to crawl every key page of their websites. However, pages without internal links pointing to them are challenging to find. An XML sitemap facilitates the content discovery process. It gives those crawlers a convenient directory — or cheat sheet, if you will — of the pages you want them to see.

An XML sitemap might also include additional technical details, like:

- When the page was last updated or modified

- The page’s priority relative to others on the website

- How frequently the content will change

- Whether any versions of the URL exist in other languages

Remember that XML sitemaps are for search engine bots, not human users.
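Of the details listed above, language versions are the least obvious to write by hand. Here's a rough Python sketch of one common way to express them: `xhtml:link` elements with `rel="alternate"` and an `hreflang` attribute, which is the convention Google documents for sitemaps. The function name `build_multilingual_sitemap` and the example.com URLs are hypothetical, chosen only for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"


def build_multilingual_sitemap(versions):
    """Return sitemap XML for one page that exists in several languages.

    `versions` (a hypothetical input shape) maps a language code such as
    "en" or "de" to the full URL of that language version.
    """
    # Use the sitemap namespace as the default and "xhtml" as a prefix.
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("xhtml", XHTML_NS)

    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url in versions.values():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        # Each entry lists every language version, including the page itself.
        for lang, href in versions.items():
            ET.SubElement(
                url,
                f"{{{XHTML_NS}}}link",
                {"rel": "alternate", "hreflang": lang, "href": href},
            )

    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical URLs for illustration only.
    print(build_multilingual_sitemap({
        "en": "https://www.example.com/en/pricing/",
        "de": "https://www.example.com/de/preise/",
    }))
```

Every language version gets its own entry, and each entry repeats the full set of alternates so crawlers can confirm the pages point at one another.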

What Websites Should Have XML Sitemaps?

XML sitemaps are valuable tools for helping crawlers analyze your digital content. They’re particularly helpful for larger websites with hundreds of pages or extensive archives.

However, any website can benefit from implementing these maps. This strategy helps Google find your website’s most fundamental pages. It also brings new and updated content to search engines’ attention.

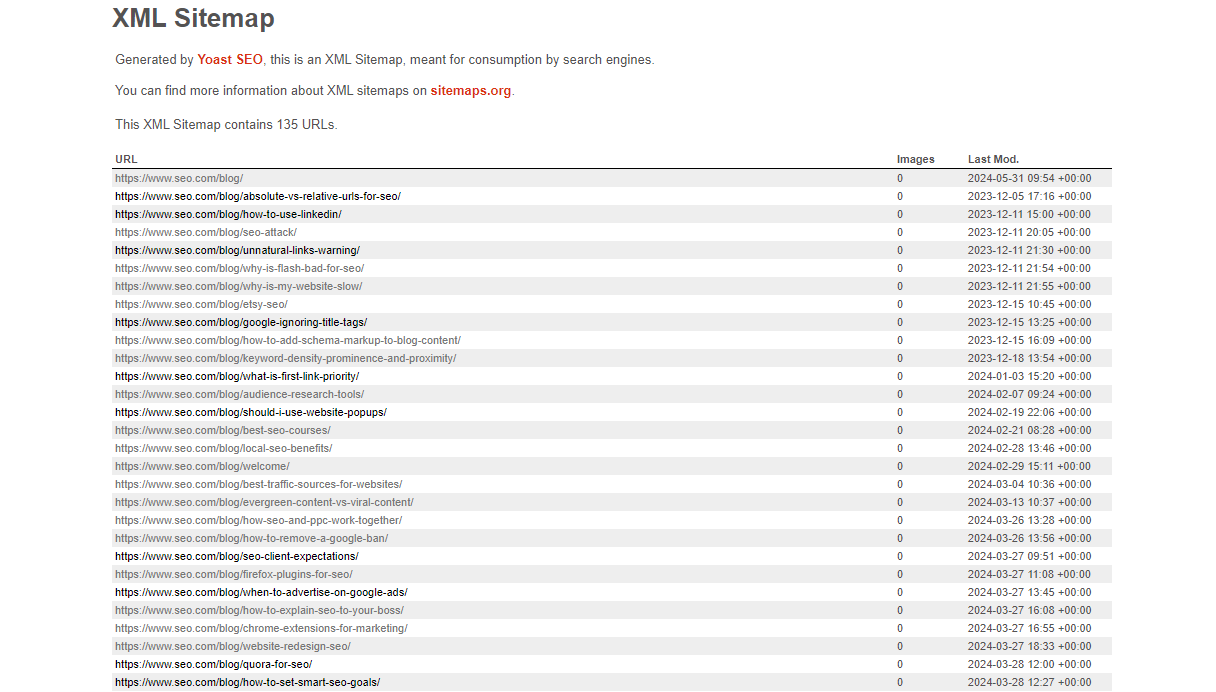

What Does an XML Sitemap Look Like?

XML sitemaps are intended for search engine crawlers. Naturally, they’re formatted in a language easy for computers to understand. Here are the main building blocks of an XML sitemap:

- Header: The XML header describes what search engines can expect from the file. It includes the XML version number and sometimes the character encoding.

- <urlset>: The <urlset> tag wraps all the sitemap’s URLs. It also specifies which version of the sitemap protocol is used.

- <url> tag: This element is the most essential. Each <url> tag contains the details for one individual URL. Every URL definition should have a location tag (<loc>). This location is where the URL will direct search engine bots. The tag’s value should be the page’s full URL, including the protocol (e.g., HTTPS or HTTP).

Additionally, an XML sitemap might include these elements:

- (<changefreq>): This tag specifies how often your pages will be updated. This value could be hourly, daily, weekly, monthly, yearly, or never. You can even have an “always” value for pages with real-time analytics, like stock prices.

- (<lastmod>): This tag indicates the date a URL’s content was last modified.

- (<priority>): The priority tag informs crawlers of your website’s most vital pages. It ranks a page relative to the other pages on your site using a scale of 0.0 to 1.0.

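While these three components are optional, they can still be helpful to include. Search engines treat them as hints rather than commands. Google, for example, has said it ignores <priority> and <changefreq> values but uses <lastmod> when it is consistently accurate.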
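To make these building blocks concrete, here's a minimal Python sketch that assembles a sitemap with the standard library. The `build_sitemap` function, the shape of the page dictionaries, and the example.com URLs are assumptions made for illustration. The namespace is the one defined by the sitemaps.org 0.9 protocol. Treat it as a starting point rather than a finished generator.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
CHANGEFREQ_VALUES = {
    "always", "hourly", "daily", "weekly", "monthly", "yearly", "never",
}


def build_sitemap(pages):
    """Return sitemap XML as a string.

    `pages` (a hypothetical input shape) is a list of dicts with a required
    "loc" key and optional "lastmod", "changefreq", and "priority" keys.
    """
    # Make the sitemap namespace the default one in the output.
    ET.register_namespace("", SITEMAP_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")

    for page in pages:
        freq = page.get("changefreq")
        if freq is not None and freq not in CHANGEFREQ_VALUES:
            raise ValueError(f"unknown changefreq: {freq!r}")
        priority = page.get("priority")
        if priority is not None and not 0.0 <= float(priority) <= 1.0:
            raise ValueError("priority must be between 0.0 and 1.0")

        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        # <loc> is required and holds the full URL, protocol included.
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page["loc"]
        # The remaining tags are optional and only written when provided.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in page:
                ET.SubElement(url, f"{{{SITEMAP_NS}}}{tag}").text = str(page[tag])

    # ET.indent exists in Python 3.9+; it only affects readability.
    if hasattr(ET, "indent"):
        ET.indent(urlset)
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for illustration only.
    print(build_sitemap([
        {"loc": "https://www.example.com/", "lastmod": "2024-01-15",
         "changefreq": "weekly", "priority": 1.0},
        {"loc": "https://www.example.com/blog/"},
    ]))
```

The printed result starts with the XML header, wraps everything in a single `<urlset>`, and gives each page its own `<url>` entry. The second page shows that `<loc>` alone is enough for a valid entry.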

XML Sitemaps vs. HTML Sitemaps

A Hypertext Markup Language (HTML) sitemap is another type of sitemap. How does it differ from its XML counterpart? Unlike XML sitemaps, which are designed for bots and crawlers, HTML sitemaps aim to make websites more navigable and user-friendly for humans.

These maps display a clear overview of your website’s layout. They help users find different pages quickly and seamlessly. Search engine bots also use HTML sitemaps for crawling. An HTML sitemap gives them a complete picture of your site, streamlining their job immensely.

Tips and Best Practices for Building XML Sitemaps

Now that you know the basics of XML sitemaps, let’s look at some pointers for building and incorporating them:

- Leverage automation tools. Manually building your sitemap following standard XML structure is one option. However, auditing software with built-in XML sitemap generators makes the process faster and simpler.

- Avoid duplicate content. Try to avoid including identical or extremely similar pages in your sitemap. That way, Google can focus on the originals and explore new and valuable content.

- Prioritize high-quality pages. Direct crawlers to your website’s most relevant, high-quality, and content-rich pages. These pages should be highly optimized, navigable, and mobile-friendly. They should be packed with unique and immersive content, like photos, graphics, and videos. They should also prompt engagement through reviews, testimonials, and comments. Site quality largely impacts your website’s search engine rankings.

- Omit “noindex” URLs from your sitemap. If you don’t want crawlers indexing certain pages, keep them out of your sitemap. When you submit an XML sitemap with “noindex” pages, you send contradictory messages to Google. Only include pages you want these bots to crawl and index.

- Submit your sitemap to Google. You can submit your XML sitemap from Google Search Console.

- Update modification times for significant changes only. Try to avoid updating page modification times unless you’re making substantial changes. If you consistently update them without offering new and valuable content, Google may start ignoring your time stamps.

- Create multiple sitemaps when necessary. A single XML sitemap can’t exceed 50 megabytes (uncompressed) or list more than 50,000 URLs. Build multiple sitemaps if you find yourself surpassing these limits.
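A few of these tips can be sketched in code. The Python example below drops pages marked "noindex", removes exact duplicate URLs, and splits the rest into groups of at most 50,000. It also builds a sitemap index file, which goes slightly beyond the tips above: the sitemaps.org protocol defines it as a way to list several sitemaps in one place. All function names, the `noindex` flag on each page dictionary, and the example.com URLs are hypothetical. The sketch checks only the URL count, not the 50-megabyte size limit, and it can't detect pages that are merely similar.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def prepare_urls(pages):
    """Drop noindex pages and exact duplicate URLs, keeping first-seen order."""
    seen = set()
    urls = []
    for page in pages:
        # "noindex" is a hypothetical flag on our own page records.
        if page.get("noindex") or page["loc"] in seen:
            continue
        seen.add(page["loc"])
        urls.append(page["loc"])
    return urls


def split_into_sitemaps(urls, limit=MAX_URLS_PER_SITEMAP):
    """Split a URL list into chunks that each fit in one sitemap file."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index (XML string) pointing at each sitemap file."""
    ET.register_namespace("", SITEMAP_NS)
    index = ET.Element(f"{{{SITEMAP_NS}}}sitemapindex")
    for sitemap_url in sitemap_urls:
        sitemap = ET.SubElement(index, f"{{{SITEMAP_NS}}}sitemap")
        ET.SubElement(sitemap, f"{{{SITEMAP_NS}}}loc").text = sitemap_url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical page records for illustration only.
    pages = [
        {"loc": "https://www.example.com/"},
        {"loc": "https://www.example.com/"},            # exact duplicate
        {"loc": "https://www.example.com/thank-you/", "noindex": True},
        {"loc": "https://www.example.com/blog/"},
    ]
    chunks = split_into_sitemaps(prepare_urls(pages))
    # Hypothetical file naming scheme: sitemap-1.xml, sitemap-2.xml, ...
    names = [f"https://www.example.com/sitemap-{n}.xml"
             for n in range(1, len(chunks) + 1)]
    print(build_sitemap_index(names))
```

With a setup like this, you would submit only the index file and let it point crawlers to each individual sitemap.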

Optimize Your Website With Digital Marketing Services From SEO.com

A well-constructed XML sitemap plays a sizeable role in driving traffic and conversions. Partnering with an experienced digital marketing agency is another step toward this objective.

SEO.com is a trusted partner for businesses worldwide. From increasing your visibility and traffic from search engines to enhancing your online brand reputation, SEO brings many benefits to your business.

To learn more about how we can optimize your website and SEO strategy, check out the content on our blog for more handy guides and advice straight from our SEO experts!
